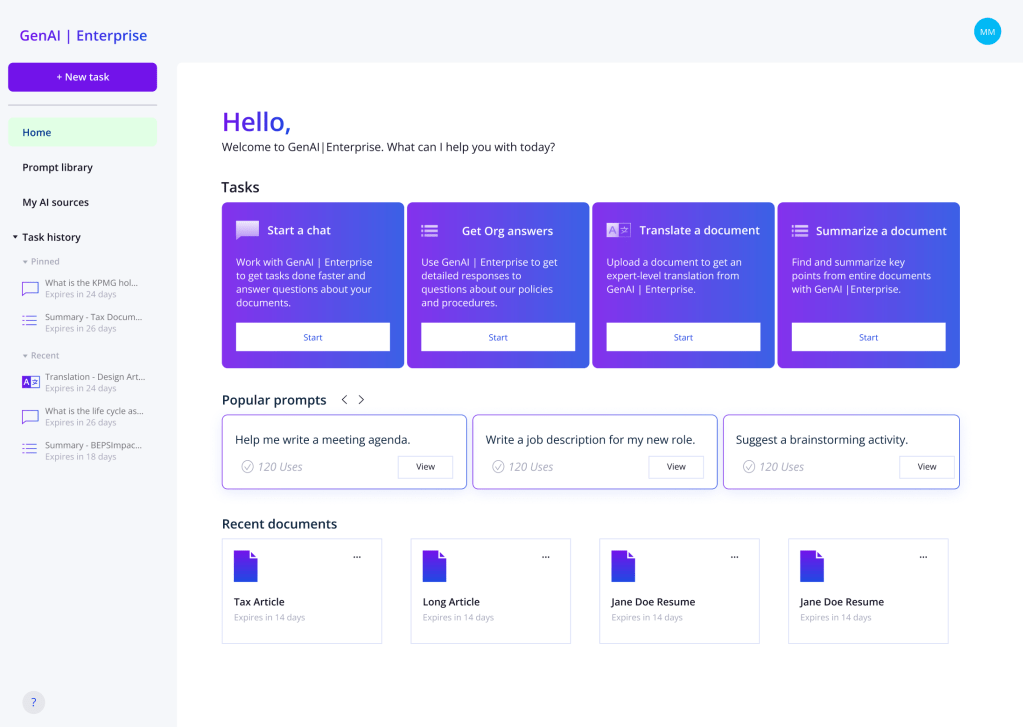

Virtual Workplace Assistant

From August 2023 to January 2024 I worked on a virtual assistant platform that brought the power of generative AI and large language models to employees at a privacy-focused international financial firm.

Establishing a Baseline

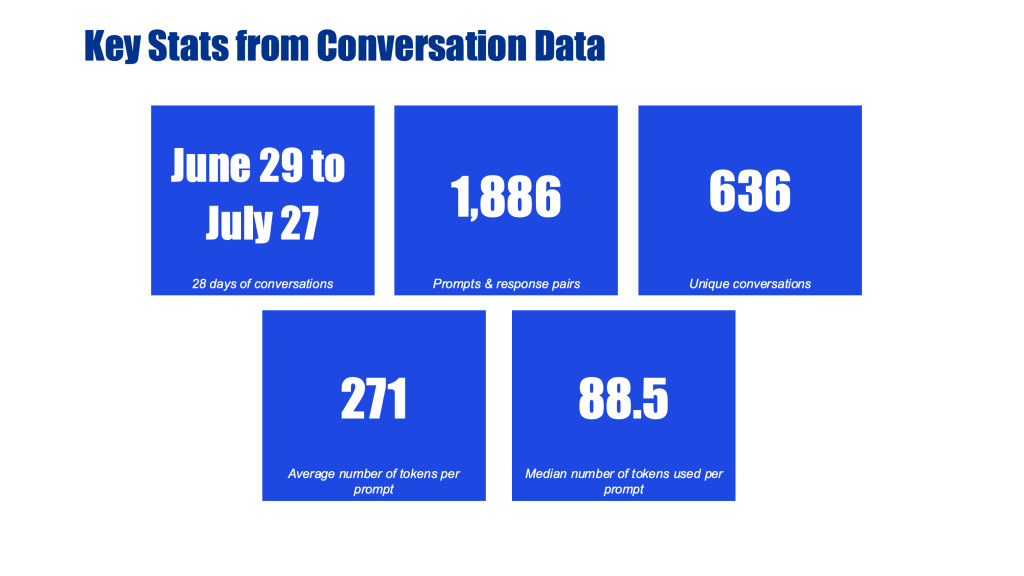

Before I joined the project, a beta version of the platform had already been shared with a subset of the employee population (about 150 users). In preparation for the prototyping phase, I analyzed data logs to identify how users were interacting with the platform: which tasks they performed most often and which features might be confusing or misleading.

I highlighted several discoveries that would influence the final version of the product, including its overall information architecture.

User Interviews and Journey Mapping

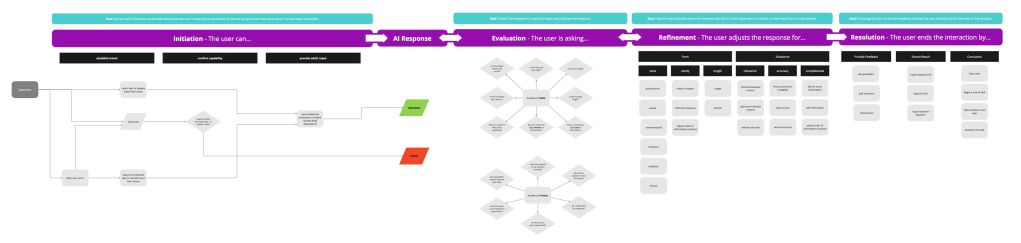

As we began building a prototype, we held user interviews to understand not only what users were doing with the platform, but also what they expected to be able to do. This was new technology for everyone, so it was important that the platform communicate its strengths as well as its weaknesses. We needed to account for users’ misconceptions while emphasizing what the platform could actually do.

Building on the analysis from the data logs and interviews, I developed a standard user journey diagram for GenAI interactions. We used this to evaluate different tasks and build solutions to simplify key behaviors on the platform.

Accessibility and Style

Accessibility standards for generative AI had not yet been set by the industry. To fill this gap, I set accessibility expectations for the new platform based on widely accepted guidance for similar technologies.

Not only was generative AI technology new to everyone, so was the language we used to describe it. Before we released the platform to a wider user base, I wrote a set of guidelines to ensure that we described the platform and its underlying technology accurately and consistently, and to encourage users to make the most of it.

These guidelines shaped both the in-product language and the internal communications and promotional material about the platform.

Defining a Content Governance Process

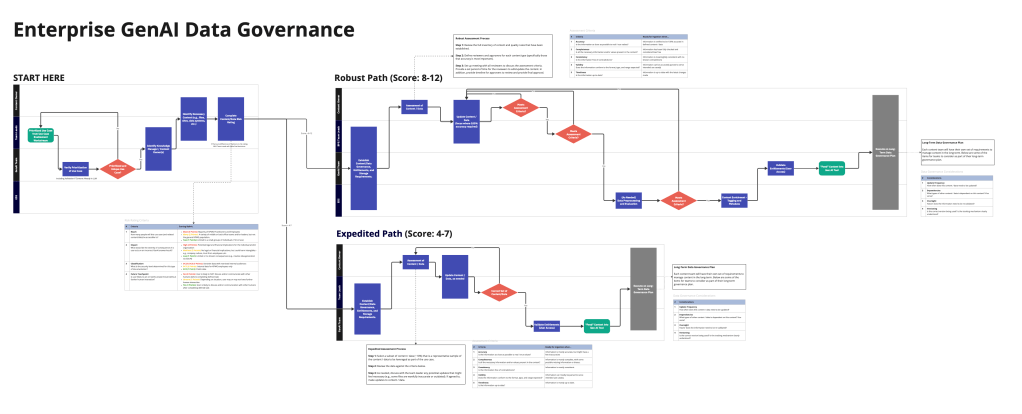

One of the core features of the platform was its ability to function as an enhanced search engine, answering questions about official documents and content. We needed to consider carefully what content was uploaded to the system to avoid hallucinations and incorrect information retrieval. To mitigate these risks, I developed a process to evaluate and review content before it was uploaded.

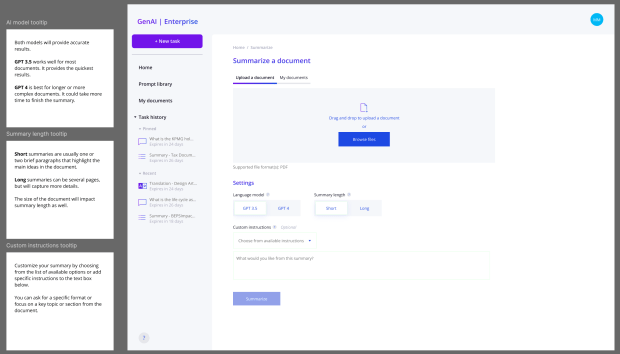

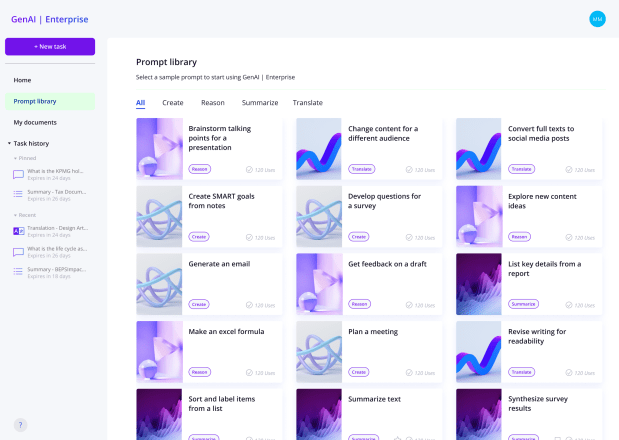

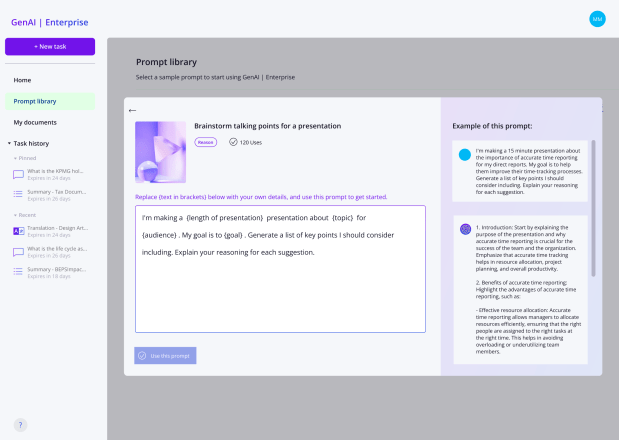

Writing UX Copy and Prompt Samples

As the screens for the prototype were being finalized, I wrote the UX copy for what would become the final version of the platform. This included introductory text, tooltips, and a set of standard prompt templates whose structure I designed so that key words and phrases could be matched to employee and business needs.
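
The actual templates are not reproduced here, but the general idea of a slot-based prompt template can be sketched in a few lines of Python. Everything in this sketch is hypothetical and for illustration only: the template wording, the slot names (`document_type`, `audience`, `topic`), and the `fill_prompt` helper are assumptions, not the platform's real content or code.

```python
from string import Template

# Hypothetical template: the wording and slot names are illustrative only.
SUMMARY_TEMPLATE = Template(
    "Summarize the $document_type for a $audience audience, "
    "focusing on $topic."
)


def fill_prompt(template: Template, **slots: str) -> str:
    """Fill every slot in the template; raises KeyError if one is missing."""
    return template.substitute(**slots)


# Example: match the slots to one (made-up) employee need.
prompt = fill_prompt(
    SUMMARY_TEMPLATE,
    document_type="travel policy",
    audience="new-hire",
    topic="expense limits",
)
print(prompt)
```

Keeping the sentence structure fixed and varying only the slots is one way to let the same template serve different employee and business needs while keeping the wording consistent.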